The Ultimate Guide to Image Annotation for Computer Vision: Applications, Methods, and Categories

This guide handpicks concepts and presents them as simply as possible so you get a clear picture of what image annotation is about. It helps you form a clear vision of how you could go about developing your product, the processes behind it, the technicalities involved, and more. So, this guide is an especially useful resource if you are:

Introduction

Have you used Google Lens recently? If you haven't, you will realize once you start exploring its remarkable capabilities that the future we have all been waiting for is finally here. Although it is a simple, ancillary feature of the Android ecosystem, Google Lens shows how far we have come in terms of technological advancement and evolution.

We have moved from simply staring at our devices, with communication flowing only one way (from humans to machines), to a non-linear interaction in which devices can look right back at us and analyze and process what they see in real time.

This is called computer vision, and it is all about how a device can understand and make sense of real-world elements from what it sees through its camera. Coming back to Google Lens, it lets you find information about random objects and products. If you simply point your device's camera at a mouse or a keyboard, Google Lens can tell you its make, model, and manufacturer.

You can also point it at a building or a location and get details about it in real time. You can scan a math problem and get solutions for it, convert handwritten notes into text, track packages by simply scanning them, and do much more with just your camera.

Computer vision doesn't end there. You may have seen it on Facebook, which for years automatically detected faces in uploaded photos and suggested tags for you, your friends, and your family. Computer vision is elevating people's lifestyles, simplifying complex tasks, and making people's lives easier.

What Is Image Annotation?

Image annotation is used to train AI and machine learning models to identify objects in images and videos. During image annotation, we add labels and tags with additional information to images, which are later passed to computers to help them identify objects in image sources.

Image annotation is a building block of computer vision models, as annotated images serve as the eyes of your ML project. This is why investing in high-quality image annotation is not just a best practice but a necessity for developing accurate, reliable, and scalable computer vision applications.

To keep quality high, image annotation is usually performed under the supervision of an annotation expert, using various annotation tools to attach useful information to images.

Once you annotate the images with relevant data and sort them into categories, the result is structured data, which is then fed to AI and machine learning models for training.
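To make "structured data" concrete, here is a minimal sketch of a single annotated image stored as JSON. The field names (`image`, `categories`, `annotations`, `bbox`) loosely follow the COCO style, with `bbox` as `[x, y, width, height]`, but they are an assumption for illustration; annotation tools and vendors each use their own schemas.

```python
import json

# Hypothetical annotation record for one image. Field names loosely mirror
# the COCO style; they are illustrative, not a required schema.
record = {
    "image": {"id": 1, "file_name": "street_001.jpg", "width": 1280, "height": 720},
    "categories": [{"id": 1, "name": "car"}, {"id": 2, "name": "pedestrian"}],
    "annotations": [
        # bbox = [x, y, width, height] in pixels, from the top-left corner
        {"id": 10, "image_id": 1, "category_id": 1, "bbox": [100, 200, 300, 150]},
        {"id": 11, "image_id": 1, "category_id": 2, "bbox": [600, 180, 60, 170]},
    ],
}


def labels_in_image(rec):
    """Return the class names annotated in the record, in annotation order."""
    names = {c["id"]: c["name"] for c in rec["categories"]}
    return [names[a["category_id"]] for a in rec["annotations"]]


# Structured data survives a round trip through JSON unchanged.
serialized = json.dumps(record, indent=2)
print(labels_in_image(json.loads(serialized)))  # ['car', 'pedestrian']
```

Because every object is tied to a category ID and coordinates, a training pipeline can read the labels programmatically instead of interpreting free-form notes.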

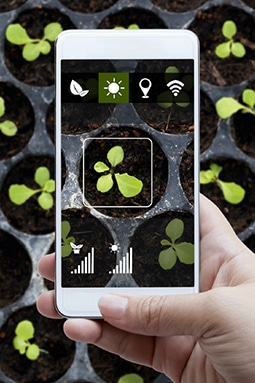

Image annotation unlocks computer vision applications like autonomous driving, medical imaging, agriculture, etc. Here are some examples of how image annotations can be used:

- Annotated images of roads, signs and obstacles can be used to train self-driving car models to navigate safely.

- For healthcare, annotated medical scans can help AI detect diseases early and can be treated as early as possible.

- You can use annotated satellite imagery in agriculture to monitor crop health. And if there’s any indication of diseases, they can be solved before they destroy the entire field.

Image Annotation for Computer Vision

Image annotation is a subset of data labeling and is also known as image tagging, transcribing, or labeling. It involves humans working behind the scenes, tirelessly tagging images with metadata and attributes that help machines identify objects better.

Image Data

- 2-D images

- 3-D images

Types of Annotation

- Image Classification

- Object Detection

- Image Segmentation

- Object Tracking

Annotation Techniques

- Bounding Box

- Polyline

- Polygon

- Landmark Annotation

What kind of images can be annotated?

Images and multi-frame images (i.e., videos) can be labeled for machine learning. The most common types are:

- 2-D and multi-frame images (video), e.g., data from cameras, SLRs, or optical microscopes

- 3-D and multi-frame images (video), e.g., data from cameras or electron, ion, or scanning probe microscopes

What Details Are Added To An Image During Annotation?

Any information that lets machines get a better understanding of what an image contains is annotated by experts. This is an extremely labor-intensive task that demands countless hours of manual effort.

As far as the details are concerned, they depend on project specifications and requirements. If the project only requires the final product to classify an image, just that information is added. For instance, if your computer vision product only needs to tell users that what they are scanning is a tree, as opposed to a creeper or a shrub, the annotated detail would simply be "tree".

However, if the project requirements are complex and demand more insights to be shared with users, annotation would include details such as the name of the tree, its botanical name, soil and weather requirements, ideal growing temperature, and more.

With these pieces of information, machines analyze and process input and deliver accurate results to end-users.

Types of Image Annotation

There's a reason you need multiple image annotation methods. At one end, there's high-level image classification, which assigns a single label to an entire image and is especially useful when the image contains only one main object. At the other end, techniques like semantic and instance segmentation label every pixel and are used for high-precision image labelling.

Apart from having different types of image annotation for different image categories, there are other reasons, such as using a technique optimised for a specific use case or finding the right balance between speed and accuracy for your project.


Image Classification

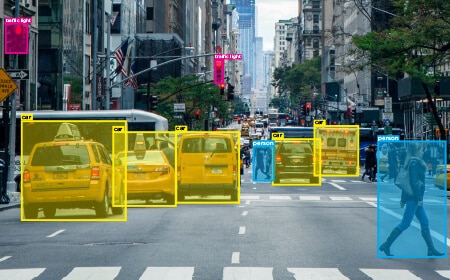

The most basic type, where images are broadly classified. Here, the process involves only identifying the broad categories of elements present, such as vehicles, buildings, and traffic lights.

Object Detection

A slightly more specific function, where different objects are identified and annotated. For example, vehicles could be identified as cars or taxis, buildings as skyscrapers, and lanes as lane 1, lane 2, and so on.

Image Segmentation

This goes into the specifics of every image. It involves adding information about an object, such as its color, location, and appearance, to help machines differentiate between objects. For instance, the vehicle in the center would be a yellow taxi in lane 2.

Object Tracking

This involves identifying an object’s details such as location and other attributes across several frames in the same dataset. Footage from videos and surveillance cameras can be tracked for object movements and studying patterns.
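At its core, tracking means keeping the same ID on the same object from frame to frame. The sketch below illustrates the idea under simple assumptions: boxes are `(x1, y1, x2, y2)` tuples, and a box inherits the ID of the nearest box from the previous frame if their centers are within a hypothetical `max_distance`. Production trackers typically add motion prediction and optimal (rather than greedy) assignment.

```python
import math


def center(box):
    """Center point of a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def track(frames, max_distance=20.0):
    """Assign each box a track ID that persists across frames.

    frames: list of frames, each a list of (x1, y1, x2, y2) boxes.
    Returns a list of frames, each a list of (track_id, box) pairs.
    """
    next_id = 0
    previous = []  # (track_id, box) pairs from the previous frame
    tracked = []
    for boxes in frames:
        current = []
        available = list(previous)  # previous boxes not yet claimed in this frame
        for box in boxes:
            c = center(box)
            # Greedy choice: the nearest unclaimed box from the previous frame.
            nearest = min(available, key=lambda t: math.dist(center(t[1]), c), default=None)
            if nearest is not None and math.dist(center(nearest[1]), c) <= max_distance:
                available.remove(nearest)
                current.append((nearest[0], box))
            else:
                current.append((next_id, box))  # no match: start a new track
                next_id += 1
        tracked.append(current)
        previous = current
    return tracked


frames = [
    [(0, 0, 10, 10), (100, 100, 110, 110)],
    [(5, 0, 15, 10), (104, 103, 114, 113)],
    [(10, 0, 20, 10), (300, 300, 310, 310)],  # a new object appears far away
]
ids = [[track_id for track_id, _ in frame] for frame in track(frames)]
print(ids)  # [[0, 1], [0, 1], [0, 2]]
```

In this simplified version, a track ends as soon as its object goes unmatched for a single frame, which is one reason real trackers keep short-lived memory of lost objects.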

Now, let's look at each method in detail.

Image Classification

Image classification is the process of assigning a label or category to an entire image based on its content. For example, if the main subject of an image is a dog, the image is labelled “dog”.

In image annotation, image classification is often used as a first step before more detailed annotation such as object detection or image segmentation, as it plays a crucial role in understanding the overall subject of an image.

For example, if you want to annotate vehicles for autonomous driving applications, you can pick images classified as “vehicles” and ignore the rest. This saves a lot of time and effort by narrowing down the relevant images for further detailed image annotation.

Think of it as a sorting process where you are putting images into different labelled boxes based on the main subject of an image which you will further be using for more detailed annotation.
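The sorting step can be sketched in a few lines of Python. The file names and labels here are hypothetical; the point is that each image carries exactly one label, and only the relevant group moves on to detailed annotation.

```python
from collections import defaultdict

# Hypothetical image-level labels from a classification pass.
image_labels = {
    "img_001.jpg": "vehicles",
    "img_002.jpg": "buildings",
    "img_003.jpg": "vehicles",
    "img_004.jpg": "vegetation",
}


def sort_into_boxes(labels):
    """Group image file names by their single image-level label."""
    boxes = defaultdict(list)
    for file_name, label in sorted(labels.items()):
        boxes[label].append(file_name)
    return dict(boxes)


boxes = sort_into_boxes(image_labels)
# Only the "vehicles" box moves on to detailed annotation; the rest are skipped.
to_annotate = boxes.get("vehicles", [])
print(to_annotate)  # ['img_001.jpg', 'img_003.jpg']
```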

Key points:

- The idea is to find out what the entire image represents, rather than localizing each object.

- The two most common approaches for image classification include supervised classification (using pre-labelled training data) and unsupervised classification (automatically discovering categories).

- Serves as a foundation for many other computer vision tasks.

Object Detection

While image classification assigns a label to the entire image, object detection goes a step further by locating individual objects within it. Besides drawing a bounding box around each detected object, it assigns a class label (e.g., “car,” “person,” “stop sign”) to each box, indicating the type of object the box contains.

Let’s suppose you have an image of a street with various objects such as cars, pedestrians, and traffic signs. If you were to use image classification there, it would label the image as a “street scene” or something similar.

However, object detection would go a step further and draw bounding boxes around each individual car, pedestrian, and traffic sign, isolating each object and labelling each with a meaningful description.

Key points:

- Draws bounding boxes around the detected objects and assigns them a class label.

- It tells you what objects are present and where they are located in the image.

- Popular object detection models include R-CNN, Fast R-CNN, YOLO (You Only Look Once), and SSD (Single Shot Detector).
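A detection annotation pairs a class label with a box. One common way to check two annotations of the same object against each other (say, an annotator's box and a reviewer's) is intersection over union (IoU). This is a minimal sketch assuming boxes as `(x_min, y_min, x_max, y_max)` pixel coordinates; the 0.5 agreement threshold is a common convention, not a fixed rule, and `boxes_agree` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str  # class label, e.g. "car"
    box: tuple  # (x_min, y_min, x_max, y_max) in pixels


def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def boxes_agree(ref, other, threshold=0.5):
    """True if both annotations share a label and overlap by at least `threshold` IoU."""
    return ref.label == other.label and iou(ref.box, other.box) >= threshold


annotator = Detection("car", (100, 200, 400, 350))
reviewer = Detection("car", (110, 205, 400, 355))
print(round(iou(annotator.box, reviewer.box), 3))  # 0.905
print(boxes_agree(annotator, reviewer))  # True
```

The same IoU measure is also widely used to score a model's predicted boxes against annotated ground truth.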

Segmentation

Image segmentation is the process of dividing an image into multiple segments, or sets of pixels, to produce a representation that is more meaningful and easier to analyse than the original image.

There are 3 main types of image segmentation, each meant for a different use.

Semantic segmentation

It is one of the fundamental tasks in computer vision: you partition an image into multiple segments and associate each segment with a semantic label or class. Unlike image classification, where you assign a single label to the entire image, semantic segmentation assigns a class label to every pixel, so the output is far more refined.

The goal of semantic segmentation is to understand the image at a granular level by precisely delineating the boundaries or contours of every object class, surface, or region at the pixel level.

Key points:

- Because all pixels of a class are grouped together, it cannot distinguish between different instances of the same class.

- Gives you a “holistic” view by labelling all pixels, but does not separate individual objects.

- In most cases, it uses fully convolutional networks (FCNs) that output a classification map with the same resolution as the input.
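The sketch below shows what a semantic mask looks like as data, using a hypothetical 4x6 image with made-up class IDs. Every pixel stores one class ID, so two separate cars end up as a single pool of "car" pixels.

```python
from collections import Counter

# Hypothetical class IDs for a tiny 4x6 image.
CLASSES = {0: "road", 1: "car", 2: "sky"}

# A semantic mask has the same height and width as the image,
# and every pixel holds exactly one class ID.
semantic_mask = [
    [2, 2, 2, 2, 2, 2],
    [1, 1, 0, 0, 1, 1],  # a car on the left and another on the right
    [1, 1, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
]

pixel_counts = Counter(CLASSES[c] for row in semantic_mask for c in row)
# Both cars fall into one "car" bucket; the mask cannot say there are two.
print(pixel_counts["car"])  # 8
```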

Instance segmentation

Instance segmentation goes a step beyond semantic segmentation by identifying each individual object and precisely outlining its boundaries, even when several objects belong to the same class.

In instance segmentation, with every object detected, the algorithm provides a bounding box, a class label (e.g., person, car, dog), and a pixel-wise mask that shows the exact size and shape of that specific object.

It is more complex than semantic segmentation, where the goal is to label each pixel with a category without separating different objects of the same type.

Key points:

- Identifies and separates individual objects by giving each one a unique label.

- It is more focused on countable objects with clear shapes like people, animals, and vehicles.

- It uses a separate mask for each object instead of using one mask per category.

- It is often implemented by extending an object detection model with an additional segmentation branch, as Mask R-CNN does with Faster R-CNN.
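For contrast, here is a minimal sketch of instance annotations for a similar tiny hypothetical image: each car gets its own binary mask and ID. Collapsing the masks into a semantic map (the hypothetical `to_semantic` helper) shows exactly what instance segmentation preserves and semantic segmentation discards.

```python
# Each instance gets its own binary mask (1 = pixel belongs to this object).
# The 4x6 image, IDs, and labels are hypothetical.
instances = [
    {"id": 1, "label": "car", "mask": [[0, 0, 0, 0, 0, 0],
                                       [1, 1, 0, 0, 0, 0],
                                       [1, 1, 0, 0, 0, 0],
                                       [0, 0, 0, 0, 0, 0]]},
    {"id": 2, "label": "car", "mask": [[0, 0, 0, 0, 0, 0],
                                       [0, 0, 0, 0, 1, 1],
                                       [0, 0, 0, 0, 1, 1],
                                       [0, 0, 0, 0, 0, 0]]},
]


def area(mask):
    """Number of pixels covered by a binary mask."""
    return sum(sum(row) for row in mask)


def to_semantic(instances, height, width, background="background"):
    """Collapse per-instance masks into a single label-per-pixel map."""
    out = [[background] * width for _ in range(height)]
    for inst in instances:
        for y, row in enumerate(inst["mask"]):
            for x, value in enumerate(row):
                if value:
                    out[y][x] = inst["label"]
    return out


for inst in instances:
    print(inst["id"], inst["label"], area(inst["mask"]))  # each car keeps its own ID and size

semantic = to_semantic(instances, height=4, width=6)
# After collapsing, all 8 car pixels share one label and the object count is gone.
print(sum(row.count("car") for row in semantic))  # 8
```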

Panoptic segmentation

Panoptic segmentation combines the capabilities of semantic segmentation and instance segmentation. Its biggest advantage is that it assigns a semantic label and an instance ID to every pixel in an image, giving you a complete analysis of the entire scene in one go.

The output of panoptic segmentation is a segmentation map in which each pixel is labelled with a semantic class and, if it belongs to an object instance, an instance ID; pixels that do not belong to any class are marked as void.

Panoptic segmentation has its challenges, though. The model must perform both tasks at once and resolve potential conflicts between semantic and instance predictions, which requires more computational resources. As a result, it is typically used only where both semantic context and individual instances are required.

Key points:

- It assigns a semantic label and instance ID to every pixel.

- Mixture of semantic context and instance-level detection.

- Many implementations combine semantic and instance segmentation branches that share a common backbone.
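One way to picture a panoptic map is to pack the class and the instance ID into a single integer per pixel. The sketch below assumes the encoding `class_id * 1000 + instance_id`, with instance 0 for classes that have no instances (such as road) and `-1` for void pixels; this convention and the class IDs are illustrative assumptions, as datasets define their own formats.

```python
OFFSET = 1000  # assumed: allows up to 999 instances per class
VOID = -1      # assumed marker for pixels that belong to no class


def encode(class_id, instance_id=0):
    """Pack (class_id, instance_id) into one integer; instance 0 means no instance."""
    return class_id * OFFSET + instance_id


def decode(value):
    """Unpack a pixel value into (class_id, instance_id), or None for void."""
    if value == VOID:
        return None
    return divmod(value, OFFSET)


ROAD, CAR = 0, 1  # hypothetical class IDs

# A tiny 2x4 panoptic map: every pixel carries a class and, for cars, an instance ID.
panoptic_map = [
    [encode(CAR, 1), encode(CAR, 1), encode(ROAD), encode(CAR, 2)],
    [encode(ROAD), encode(ROAD), VOID, encode(CAR, 2)],
]

# The class part gives the semantic view; the instance part tells the cars apart.
car_instances = {decode(v) for row in panoptic_map for v in row
                 if v != VOID and decode(v)[0] == CAR}
print(len(car_instances))  # 2
```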

Here's a simple illustration showing the difference between semantic segmentation, instance segmentation, and panoptic segmentation:

Image Annotation Techniques

Image annotation is done through various techniques and processes. To get started, you need a software application that offers the features, functionality, and tools your project requires.

For the uninitiated, there are several commercially available image annotation tools that you can customize for your specific use case, as well as open-source tools. However, if your requirements are niche and you feel the modules offered by commercial tools are too basic, you could have a custom image annotation tool developed for your project. This is, of course, more expensive and time-consuming.

Regardless of the tool you build or subscribe to, there are certain image annotation techniques that are universal. Let’s look at what they are.

Bounding Boxes

The most basic image annotation technique involves experts or annotators drawing a box around an object to attach object-specific details. This technique is best suited to objects with regular, symmetrical shapes.

A variation of the bounding box is the cuboid. While standard bounding boxes are two-dimensional, cuboids are their 3D counterpart and capture an object across all three dimensions for more accurate details. If you consider the above image, the vehicles could easily be annotated with bounding boxes.

To give you a better idea, 2D boxes give you details of an object’s length and breadth. However, the cuboid technique gives you details on the depth of the object as well. Annotating images with cuboids becomes more taxing when an object is only partially visible. In such cases, annotators approximate an object’s edges and corners based on existing visuals and information.
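The difference between the two box types shows up clearly in the data each one stores. In this sketch (hypothetical coordinates, and axis-aligned cuboids with rotation ignored for simplicity), a 2D box is derived as the tightest rectangle around an object's visible points, while a cuboid adds a depth dimension and therefore has eight corners instead of four.

```python
from dataclasses import dataclass


@dataclass
class Box2D:
    x: float       # left edge, pixels
    y: float       # top edge, pixels
    width: float
    height: float


@dataclass
class Cuboid:
    center: tuple      # (x, y, z) in an assumed 3D frame, e.g. metres
    dimensions: tuple  # (length, width, height): the extra axis gives depth

    def corners(self):
        """Eight corners of an axis-aligned cuboid (rotation ignored for simplicity)."""
        cx, cy, cz = self.center
        length, width, height = self.dimensions
        return [
            (cx + sx * length / 2, cy + sy * width / 2, cz + sz * height / 2)
            for sx in (-1, 1)
            for sy in (-1, 1)
            for sz in (-1, 1)
        ]


def tight_box(points):
    """Smallest axis-aligned 2D box enclosing an object's visible (x, y) points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return Box2D(min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))


box = tight_box([(10, 20), (50, 25), (30, 80)])
print(box)  # Box2D(x=10, y=20, width=40, height=60)
car = Cuboid(center=(0.0, 0.0, 0.0), dimensions=(4.0, 2.0, 1.5))
print(len(car.corners()))  # 8
```

Real cuboid annotations usually also record the object's rotation (for example, its heading on the ground plane), which this sketch leaves out.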

Landmarking

This technique is used to bring out the intricacies of objects' movements in an image or footage. It can also be used to detect and annotate small objects. Landmarking is used especially in facial recognition to annotate facial features, gestures, expressions, postures, and more. It involves individually identifying facial features and their attributes for accurate results.

To give you a real-world example of where landmarking is useful, think of the Instagram or Snapchat filters that accurately place hats, goggles, or other funny elements based on your facial features and expressions. So, the next time you pose with a dog filter, know that the app is locating your facial landmarks, using a model trained on landmark-annotated images, to place it precisely.
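To illustrate how landmarks can drive a filter, here is a sketch that places a hypothetical "goggles" overlay from two annotated eye landmarks. The landmark names, coordinates, and the rule of sizing the overlay at twice the eye distance are all assumptions; real apps use many more landmarks and a trained model to predict them.

```python
import math

# Hypothetical landmark annotation: named keypoints in pixel coordinates.
landmarks = {
    "left_eye": (120.0, 150.0),
    "right_eye": (180.0, 150.0),
    "nose_tip": (150.0, 190.0),
}


def goggles_placement(points):
    """Return (center, width, tilt_degrees) for a hypothetical goggles overlay."""
    (lx, ly), (rx, ry) = points["left_eye"], points["right_eye"]
    center = ((lx + rx) / 2, (ly + ry) / 2)  # midpoint between the eyes
    eye_distance = math.hypot(rx - lx, ry - ly)
    tilt = math.degrees(math.atan2(ry - ly, rx - lx))  # 0 when the eyes are level
    width = 2.0 * eye_distance  # assumed sizing rule
    return center, width, tilt


print(goggles_placement(landmarks))  # ((150.0, 150.0), 120.0, 0.0)
```

If the head tilts, the eye landmarks move and the overlay rotates with them, which is why precise landmark annotation matters for these effects.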

Polygons

Objects in images are not always symmetrical or regular; many are irregular or arbitrarily shaped. In such cases, annotators use the polygon technique to precisely annotate irregular shapes and objects. This technique involves manually placing points along an object's outline and connecting them with lines that trace its perimeter.
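Once the points are placed, a polygon annotation is just an ordered list of vertices. This sketch computes the enclosed area with the shoelace formula and tests whether a pixel lies inside the polygon with ray casting, which is one way a polygon can be turned into a pixel mask. The L-shaped object outline is hypothetical.

```python
def polygon_area(vertices):
    """Area enclosed by a polygon annotation, via the shoelace formula.

    vertices: (x, y) points in drawing order; the last connects back to the first.
    """
    n = len(vertices)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


def contains(vertices, x, y):
    """Ray casting: True if (x, y) lies inside the polygon."""
    inside = False
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # this edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical L-shaped object outline.
outline = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 3), (0, 3)]
print(polygon_area(outline))        # 6.0
print(contains(outline, 0.5, 2.0))  # True: inside the vertical arm
print(contains(outline, 3.0, 2.0))  # False: in the notch of the L
```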

Lines

Apart from basic shapes and polygons, simple lines are also used to annotate objects in images. This technique helps machines identify boundaries. For instance, lines are drawn along driving lanes so that autonomous vehicles can better understand the boundaries within which they need to maneuver. Lines are also used to train these systems for diverse scenarios and circumstances and to help them make better driving decisions.
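A lane line is typically stored as a polyline: an ordered list of points. The sketch below, using a hypothetical lane and vehicle position in pixels, computes the lane's length and the shortest distance from a point to it; a distance like this is one simple signal of how close a vehicle is to a boundary.

```python
import math


def polyline_length(points):
    """Total length of a polyline given as an ordered list of (x, y) points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def distance_to_polyline(point, points):
    """Shortest distance from a point to any segment of the polyline."""
    px, py = point
    best = math.inf
    for (x1, y1), (x2, y2) in zip(points, points[1:]):
        dx, dy = x2 - x1, y2 - y1
        seg_len_sq = dx * dx + dy * dy
        # Project the point onto the segment, clamped to the segment's endpoints.
        t = 0.0 if seg_len_sq == 0 else ((px - x1) * dx + (py - y1) * dy) / seg_len_sq
        t = max(0.0, min(1.0, t))
        best = min(best, math.hypot(px - (x1 + t * dx), py - (y1 + t * dy)))
    return best


lane = [(0, 0), (0, 10), (3, 14)]  # hypothetical lane boundary, pixels
print(polyline_length(lane))               # 15.0
print(distance_to_polyline((2, 5), lane))  # 2.0
```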

Use Cases for Image Annotation

In this section, I will walk you through some of the most impactful and promising use cases of image annotation ranging from security, safety, and healthcare to advanced use cases such as autonomous vehicles.

Retail: In a shopping mall or grocery store, the 2-D bounding box technique can be used to label images of in-store products, such as shirts, trousers, and jackets, as well as people, to train ML models on attributes such as price, color, and design.

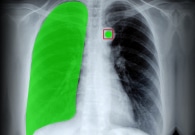

Healthcare: The polygon technique can be used to annotate human organs in medical X-rays to train ML models to identify deformities. This is one of the most critical use cases and is transforming the healthcare industry by helping identify diseases, reduce costs, and improve patient experience.

Self-Driving Cars: Autonomous driving has already seen success, yet it still has a long way to go. Many car manufacturers have yet to adopt the technology, which relies on semantic segmentation to label each pixel of an image as road, car, traffic light, pole, pedestrian, and so on, so that vehicles are aware of their surroundings and can sense obstacles in their way.

Emotion Detection: Landmark annotation is used to detect human emotions or sentiments (happy, sad, or neutral) and measure the subject's emotional state in response to a given piece of content. Emotion detection, or sentiment analysis, can be used for product reviews, service reviews, movie reviews, email complaints and feedback, customer calls, meetings, and more.

Supply Chain: Lines and splines are used to label lanes in a warehouse and to identify racks based on their delivery location. This, in turn, helps robots optimize their paths and automate the delivery chain, minimizing human intervention and errors.

How Do You Approach Image Annotation: In-house vs Outsource?

Image annotation demands investment not just of money but of time and effort as well. As we mentioned, it is labor-intensive work that requires meticulous planning and diligent involvement. What image annotators attribute is what machines will process and base their results on, so the image annotation phase is extremely crucial.

Now, from a business perspective, you have two ways to go about annotating your images:

- You can do it in-house

- Or you can outsource the process

Both are unique and offer their own fair share of pros and cons. Let’s look at them objectively.

In-house

With this approach, your existing talent pool or team members take care of image annotation tasks. The in-house approach implies that you have a data generation source in place, the right tool or data annotation platform, and a team with an adequate skill set to perform annotation tasks.

This is perfect if you’re an enterprise or a chain of companies, capable of investing in dedicated resources and teams. Being an enterprise or a market player, you also wouldn’t have a dearth of datasets, which are crucial for your training processes to begin.

Outsourcing

This is another way to accomplish image annotation tasks: you give the job to a team with the required experience and expertise. All you have to do is share your requirements and a deadline with them, and they'll ensure you receive your deliverables on time.

The outsourced team could be in the same city or neighborhood as your business or in a completely different geographical location. What matters in outsourcing is the hands-on exposure to the job and the knowledge of how to annotate images.

Image Annotation: Outsourcing vs In-House Teams – Everything You Need to Know

| Outsourcing | In-house |
|---|---|
| An additional layer of clauses and protocols needs to be implemented when outsourcing a project to a different team to ensure data integrity and confidentiality. | You can seamlessly maintain data confidentiality when dedicated in-house resources work on your datasets. |
| You can customize the way you want your image data to be. | You can tailor your data generation sources to meet your needs. |
| You don't have to spend additional time cleaning data before annotating it. | Your employees will have to spend additional hours cleaning raw data before annotating it. |
| Resources aren't overworked, as the process, requirements, and plan are completely charted out before collaborating. | You may end up overworking your resources because data annotation is an additional responsibility on top of their existing roles. |
| Deadlines are always met with no compromise in data quality. | Deadlines could be extended if you have fewer team members and more tasks. |
| Outsourced teams adapt more readily to new guideline changes. | Team morale drops every time you pivot on your requirements and guidelines. |
| You don't have to maintain data generation sources; the final product reaches you on time. | You are responsible for generating the data. If your project requires millions of images, it's on you to procure relevant datasets. |
| Scaling the workload or team size is never a concern. | Scalability is a major concern, as quick decisions cannot be made seamlessly. |

The Bottom Line

As you can see, although having an in-house image or data annotation team may seem more convenient, outsourcing the entire process is more profitable in the long run. When you collaborate with dedicated experts, you relieve yourself of several tasks and responsibilities you didn't need to carry in the first place. With this in mind, let's look at how you can find the right data annotation vendors or teams.

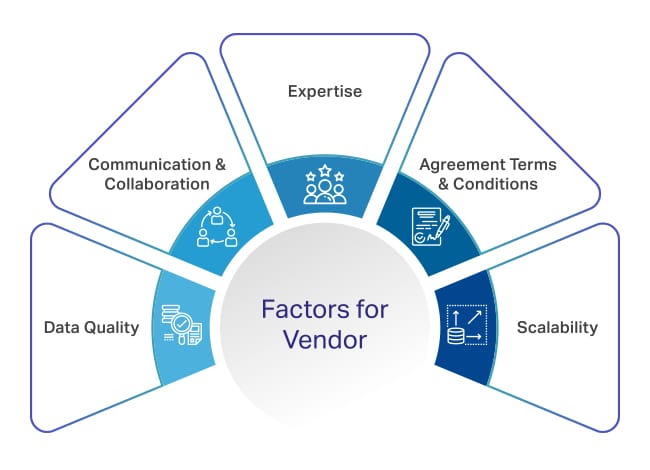

Factors To Consider When Choosing A Data Annotation Vendor

This is a huge responsibility: the performance of your entire machine learning module depends on the quality and timeliness of the datasets your vendor delivers. That's why you should pay close attention to who you talk to and what they promise, and consider several factors before signing the contract.

To help you get started, here are some crucial factors you should consider.

Expertise

One of the primary factors to consider is the expertise of the vendor or team you intend to hire for your machine learning project. The team you choose should have the most hands-on exposure to data annotation tools, techniques, domain knowledge, and experience working across multiple industries.

Besides technical skills, they should also implement workflow optimization methods to ensure smooth collaboration and consistent communication. To learn more, ask them about the following:

- The previous projects they have worked on that are similar to yours

- The years of experience they have

- The arsenal of tools and resources they deploy for annotation

- Their ways to ensure consistent data annotation and on-time delivery

- How comfortable or prepared they are in terms of project scalability and more

Data Quality

Data quality directly influences project output. All your years of toiling, networking, and investing come down to how your module performs before launching. So, ensure the vendors you intend to work with deliver the highest quality datasets for your project. To help you get a better idea, here’s a quick cheat sheet you should look into:

- How does your vendor measure data quality? What are the standard metrics?

- Details on their quality assurance protocols and grievance redressing processes

- How do they ensure the transfer of knowledge from one team member to another?

- Can they maintain data quality if volumes are subsequently increased?
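On the question of metrics: one widely used measure of annotation consistency for classification labels is inter-annotator agreement, for example Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a minimal implementation; the labels are hypothetical, and vendors may report other metrics (such as IoU for boxes) instead.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Probability that both annotators pick the same class by chance.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both used one identical class throughout; treat as full agreement
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators for the same six images.
annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog", "cat", "cat"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.333
```

Here the annotators agree on four of six images (about 67%), but because half of that agreement would be expected by chance, kappa is only about 0.33.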

Communication And Collaboration

Delivery of high-quality output does not always translate to smooth collaboration. It involves seamless communication and excellent maintenance of rapport as well. You cannot work with a team that does not give you any update during the entire course of the collaboration or keeps you out of the loop and suddenly delivers a project at the time of the deadline.

That’s why a balance becomes essential and you should pay close attention to their modus operandi and general attitude towards collaboration. So, ask questions on their communication methods, adaptability to guidelines and requirement changes, scaling down of project requirements, and more to ensure a smooth journey for both the parties involved.

Agreement Terms And Conditions

Apart from these aspects, some factors are unavoidable in terms of legalities and regulations. These include pricing terms, the duration of the collaboration, terms and conditions of association, the assignment and specification of job roles, clearly defined boundaries, and more.

Get them sorted before you sign a contract. To give you a better idea, here’s a list of factors:

- Ask about their payment terms and pricing model, including whether pricing is per hour of work or per annotation

- Is the payout monthly, weekly, or fortnightly?

- The influence of pricing models when there is a change in project guidelines or scope of work

Scalability

Your business is going to grow, and your project's scope is likely to expand significantly. In such cases, you should be confident that your vendor can deliver the volumes of labeled images your business demands at scale.

Do they have enough talent in-house? Are they exhausting all their data sources? Can they customize your data based on unique needs and use cases? Questions like these will help you judge whether the vendor can scale up when higher volumes of data are needed.

Wrapping Up

Once you consider these factors, you can be sure that your collaboration would be seamless and without any hindrances, and we recommend outsourcing your image annotation tasks to the specialists. Look out for premier companies like Shaip, who check all the boxes mentioned in the guide.

Having been in the artificial intelligence space for decades, we have seen the evolution of this technology. We know how it started, how it is going, and its future. So, we are not only keeping abreast of the latest advancements but preparing for the future as well.

Besides, we handpick experts to ensure data and images are annotated with the highest levels of precision for your projects. No matter how niche or unique your project is, always be assured that you would get impeccable data quality from us.

Simply reach out to us and discuss your requirements and we will get started with it immediately. Get in touch with us today.


Frequently Asked Questions (FAQ)

Image annotation is a subset of data labeling and is also known as image tagging, transcribing, or labeling. It involves humans working behind the scenes, tirelessly tagging images with metadata and attributes that help machines identify objects better.

An image annotation/labeling tool is software that can be used to label images with metadata and attributes that help machines identify objects better.

Image labeling/annotation services are offered by third-party vendors who label or annotate images on your behalf. They offer the required expertise, quality, agility, and scalability as and when required.

A labeled/annotated image is one that has been labeled with metadata describing the image, making it comprehensible to machine learning algorithms.

Image annotation for machine learning or deep learning is the process of adding labels or descriptions or classifying an image to show the data points you want your model to recognize. In short, it’s adding relevant metadata to make it recognizable by machines.

Image annotation involves using one or more of these techniques: bounding boxes (2-D, 3-D), landmarking, polygons, polylines, etc.